1. What is Kubernetes?

Kubernetes is an open-source container orchestration platform (in simple words, a container management tool) originally developed by Google. It automates many of the manual processes involved in deploying, managing, and scaling containerized applications.

2. Why do we call it k8s?

Many IT people prefer numeronyms, a way of shortening long words. A numeronym keeps the first and last letters of the word and replaces the letters in between with their count.

In the case of Kubernetes, the first letter is “K” and the last letter is “s.” The word has 10 letters, and between the first and the last there are 8 more, i.e., “ubernete.” This is where the 8 comes from. Combining them, we get “K8s,” a simple yet effective short form of the word Kubernetes.
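The numeronym rule can be sketched in a few lines of Python. The function name `numeronym` is only an illustrative choice, and leaving words of three letters or fewer unchanged is an assumption made for this sketch.

```python
def numeronym(word: str) -> str:
    """Keep the first and last letters and replace the rest with their count."""
    # Assumption for this sketch: very short words are returned unchanged.
    if len(word) <= 3:
        return word
    inner_letters = len(word) - 2  # everything between the first and last letter
    return f"{word[0]}{inner_letters}{word[-1]}"


print(numeronym("Kubernetes"))            # K8s
print(numeronym("internationalization"))  # i18n
```

The same rule gives other familiar short forms such as “i18n” for internationalization.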

3. What are the benefits of using k8s?

Kubernetes automates containerized environments:

Nowadays most companies choose containers. Containerization means packaging code together with only the OS libraries and dependencies it needs into a single executable unit, the container, which can run on any infrastructure. Kubernetes makes containerized environments practical at scale by acting as the orchestration system: it automates the operational work of running containerized workloads.

Scaling up and down:

With Kubernetes, companies can efficiently scale up and down based on actual demand. Autoscaling is an efficient way to run workloads and can lead to meaningful cost efficiency.
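To make the scaling idea concrete, here is a rough sketch of the replica calculation described in the Horizontal Pod Autoscaler documentation: desired replicas = ceil(current replicas × current metric / target metric). The function name, the parameter names, and the default bounds are assumptions for illustration, and the real autoscaler also applies a tolerance and stabilization behavior that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to how far the metric is from its target."""
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Clamp to the configured bounds (hypothetical defaults for this sketch).
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average CPU with a 60% target: scale up.
print(desired_replicas(4, 90.0, 60.0))
# 4 replicas at 15% average CPU with a 60% target: scale down.
print(desired_replicas(4, 15.0, 60.0))
```

When the load is above the target the replica count grows, and when it is below the target the count shrinks, which is where the cost savings come from.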

Multi-cloud possibilities:

Because of its portability, Kubernetes workloads can exist in a single cloud or be spread across multiple clouds. Most major cloud providers have Kubernetes-specific offerings. For example, Amazon’s AWS has EKS, Google’s GCP has GKE, and Microsoft’s Azure has AKS.

Cost efficiencies and savings:

One of the most popular reasons to migrate to Kubernetes is the cost efficiency and savings it makes possible. Kubernetes has autoscaling capabilities that let companies scale the resources they use up and down in real time. When paired with a flexible cloud provider, Kubernetes can closely match resource usage to demand at any given point in time.

Ability to run anywhere:

With Kubernetes, you can use nearly every container runtime with nearly any type of infrastructure. Large organizations with complex and variable infrastructure can use Kubernetes across all of these environments at scale, whereas other container orchestration systems are typically limited to a small number of options.

4. Explain the architecture of Kubernetes

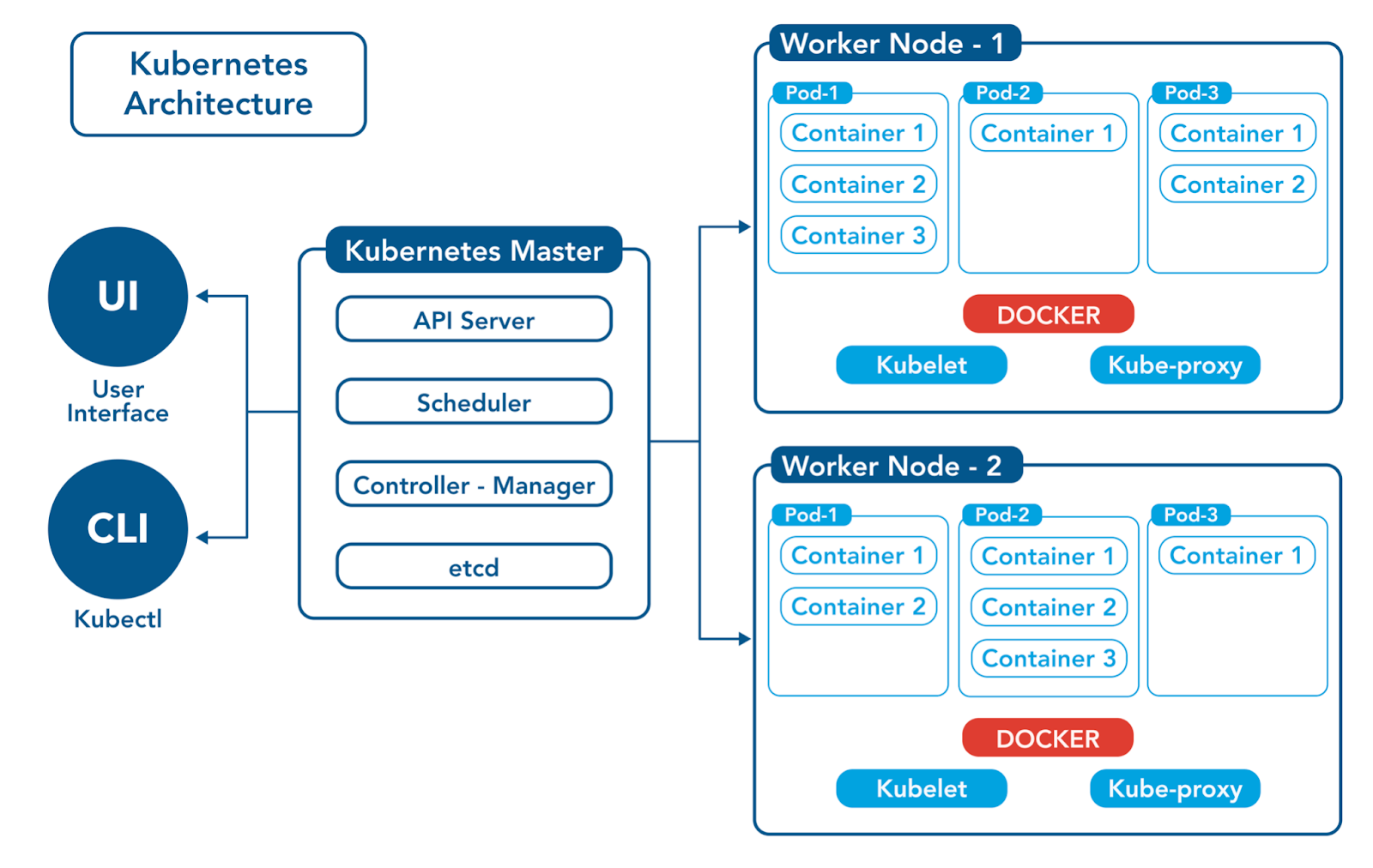

Kubernetes follows a client-server architecture. It’s possible to have a multi-master setup, but by default, there is a single master server that acts as a controlling node and point of contact.

Master Node Components:

etcd cluster: A distributed key-value store that holds the Kubernetes cluster data (such as the number of pods, their state, namespaces, etc.), API objects, and service discovery details. For security reasons, it is only accessible through the API server.

kube-scheduler: Schedules pods (a pod is a group of one or more containers in which our application processes run) onto the various nodes based on resource utilization. It reads each pod’s resource requirements and places the pod on the best-fit node.

kube-apiserver: The main component of the master node. It receives requests for modifications (to pods, services, replication sets/controllers, and others) and serves as the frontend to the cluster. It is the only component that communicates with the etcd cluster, making sure data is stored in etcd.

kube-controller-manager: Runs continuously and compares the actual state of objects with their desired state. If the two differ, it takes action to bring the Kubernetes resource/object back to the desired state.
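Two of the components above, the scheduler and the controller manager, can be sketched as small Python functions. This is a simplified illustration, not the real implementation: the `Node` class, `pick_node`, `reconcile`, and the scoring rule (prefer the node with the most CPU left over) are all assumptions made for this example. The real scheduler also filters and then scores nodes, but with many more rules.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def pick_node(nodes: List[Node], cpu_request: int, memory_request: int) -> Optional[Node]:
    """Scheduler sketch: filter out nodes that cannot fit the pod, then score the rest."""
    feasible = [n for n in nodes
                if n.free_cpu_millicores >= cpu_request
                and n.free_memory_mib >= memory_request]
    if not feasible:
        return None  # the pod would stay Pending until a node has room
    # Hypothetical scoring rule: most CPU left after placement, then most memory.
    return max(feasible, key=lambda n: (n.free_cpu_millicores - cpu_request,
                                        n.free_memory_mib - memory_request))


def reconcile(desired_replicas: int, running_pods: List[str]) -> List[str]:
    """Controller sketch: return the actions that move actual state to desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        return ["create"] * diff
    if diff < 0:
        return [f"delete {name}" for name in running_pods[:-diff]]
    return []  # actual state already matches desired state


nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096), Node("node-c", 4000, 256)]
chosen = pick_node(nodes, cpu_request=1000, memory_request=512)
print(chosen.name if chosen else "no node fits")
print(reconcile(3, ["pod-1"]))
```

A real controller runs this kind of comparison in a loop, reading state through the API server instead of taking it as function arguments.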

Worker Node Components:

kubelet: An agent that runs on each node in the cluster. It makes sure that containers are running in a Pod.

kube-proxy: A network proxy that runs on each node in your cluster, implementing part of the Kubernetes Service concept.

kube-proxy maintains network rules on nodes. These rules allow network communication to your Pods from network sessions inside or outside of your cluster.

Container runtime: The software that is responsible for running containers.

Kubernetes supports container runtimes such as containerd, CRI-O, and Docker Engine (through the cri-dockerd adapter).

What is kubectl?

kubectl is the command-line tool that Kubernetes provides for communicating with a cluster's control plane through the Kubernetes API.

5. What is the difference between kubectl and kubelet?

kubectl is the command-line interface (CLI) tool that connects to a Kubernetes cluster to read, create, and modify Kubernetes resources (like pods, deployments, and services).

kubelet is the node agent that creates, updates, and destroys containers on a Kubernetes node. kubelet is part of the Kubernetes cluster itself (the server side), whereas kubectl is a client.

6. Explain the role of the API server.

The Kubernetes API server is the main component of the Kubernetes control plane. Through its API we can create, modify, and delete the primitive elements within our Kubernetes clusters. The API also lets the different components within a cluster communicate with each other, and it facilitates interactions with external components. If you use the kubectl or kubeadm tool, you are indirectly invoking the API through those command-line tools.
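Since every interaction goes through the API server, it helps to see what its REST paths look like. The sketch below builds the path for a namespaced resource: resources in the core group live under `/api/v1`, while named groups such as `apps` live under `/apis/<group>/<version>`. The helper name `resource_path` and its parameters are assumptions for illustration; it only builds a string and does not contact a cluster.

```python
from typing import Optional


def resource_path(resource: str,
                  namespace: str,
                  name: Optional[str] = None,
                  group: str = "",
                  version: str = "v1") -> str:
    """Build the API server path for a namespaced resource (illustrative helper)."""
    # The core group (pods, services, ...) uses /api; named groups use /apis/<group>.
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    path = f"{prefix}/namespaces/{namespace}/{resource}"
    return f"{path}/{name}" if name else path


# Listing pods in the "default" namespace.
print(resource_path("pods", "default"))
# A single Deployment named "web" (a hypothetical name) in the apps/v1 group.
print(resource_path("deployments", "default", name="web", group="apps"))
```

Tools such as kubectl send HTTP requests to paths like these, with GET for reading, POST for creating, and DELETE for removing objects.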

For more details, you can check this link: https://spacelift.io/blog/kubernetes-architecture

Thank You For Reading :)

![Introduction To Kubernetes[Day-29 Task]](https://cdn.hashnode.com/res/hashnode/image/upload/v1698873208097/071f7767-197d-451f-9f5e-547ad7f28981.png?w=1600&h=840&fit=crop&crop=entropy&auto=compress,format&format=webp)